OBJECTIVE

Current attitude determination systems on board Earth-orbiting, nadir-pointing spacecraft rely on Earth-crossing

sensors which allow the detection of the Earth/atmosphere horizon and, hence, the Earth centre direction. This

information enables the computation of two attitude angles, while the angle about the sensor line-of-sight remains

unknown, unless another source of attitude information is available.

The goal of this project is the development of novel standalone spacecraft attitude sensors, capable of estimating

the full three-axis orientation of an Earth-orbiting satellite. This will be accomplished by capturing from space a

sequence of images of the Earth's surface (in both the visible and infrared bands) and matching surface

invariants (e.g. the coastlines of land masses) against a known model.

The project is organized in four phases, each comprising two parallel activities carried out by

two subgroups with complementary expertise in hardware (H/W) and software (S/W) development.

Approach:

In the first phase, the effort of the research group will be devoted to the selection of suitable enabling

technologies which could allow the use of combined Visual/IR Earth sensors as standalone attitude sensing

hardware on board an Earth-orbiting spacecraft. The underlying idea is that a camera looking down on the Earth

from space, at certain wavelengths, could identify the coastlines of land masses and match them against a database

stored on board, thereby determining the otherwise unknown angle about the sensor line-of-sight. The key issue

to be investigated in this study is the identification of the most appropriate combination of Visual/IR hardware

components (sensors and optics) capable of unambiguously detecting features on the ground (different

temperatures of land and sea areas, mountain chains, etc.). This detection has to be performed at sufficiently

high ground spatial resolution (to guarantee high angular accuracy), which requires a proper

combination of field of view (FOV), sensor dimensions and resolution (number of pixels, rows by

columns). Combining a wide FOV, to guarantee land presence in the image, with a narrow FOV, to

improve the resolution and hence the performance, will be assessed. In addition, the analysis will identify

suitable sensor working wavelengths to cope with different local weather conditions and to mitigate

the strong screening effect of clouds and of atmospheric absorption and emission.
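
The trade-off between FOV and pixel count can be made concrete with a small calculation. The sketch below is only illustrative: it assumes a nadir-pointing camera, a flat-Earth approximation and square pixels. The function name `ground_resolution`, the 700 km altitude and the two FOV values are hypothetical choices for the example, not parameters taken from this project.

```python
import math

def ground_resolution(altitude_km, fov_deg, pixels_across):
    """Return (swath_km, km_per_pixel, deg_per_pixel) for a nadir-pointing camera.

    Flat-Earth approximation: reasonable for narrow FOVs, but it
    underestimates the footprint for wide FOVs, where Earth curvature matters.
    """
    if altitude_km <= 0 or pixels_across <= 0 or not 0.0 < fov_deg < 180.0:
        raise ValueError("altitude and pixel count must be positive, FOV in (0, 180) deg")
    half_fov = math.radians(fov_deg) / 2.0
    swath_km = 2.0 * altitude_km * math.tan(half_fov)   # ground width seen by the sensor
    km_per_pixel = swath_km / pixels_across              # mean ground size of one pixel
    deg_per_pixel = fov_deg / pixels_across              # mean angular size of one pixel
    return swath_km, km_per_pixel, deg_per_pixel

# Hypothetical comparison of a wide and a narrow FOV on the same 1024-pixel sensor.
for label, fov in (("wide", 60.0), ("narrow", 10.0)):
    swath, gsd, ifov = ground_resolution(700.0, fov, 1024)
    print(f"{label:>6} FOV {fov:4.0f} deg: swath {swath:7.1f} km, "
          f"{gsd:.3f} km/pixel, {ifov:.4f} deg/pixel")
```

For a fixed pixel count, narrowing the FOV improves the per-pixel angular resolution in proportion but shrinks the footprint, which is why a wide/narrow combination is worth assessing.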

The problem of finding a match between the geographical areas of the Earth imaged by the on-board camera and

the corresponding zones stored in the database belongs to the field of Pattern Recognition. Here, the most important

task is to identify the appropriate patterns, whether they are photometric, geometrical or mathematical features.

These must be robust to distortions due to projection and to partial occlusions caused by clouds

covering part of the frame. In addition, whichever algorithms are chosen, the final

H/W requirements must be taken into account. Therefore, among all candidates, those reaching the best compromise in

terms of computational and memory requirements will be selected. Photometric feature points are simple to compute

and to track. However, they are usually not robust to occlusions. Geometrical markers offer high specificity

but suffer from distortions. A classification approach based on Support Vector Machines (SVMs) should be

considered in order to build, for each marker, a class made of its probable distortions. Mathematical features could be

wavelet coefficients, which are used for texture analysis, including for IR images. They can be expensive to

compute, but they make the best use of the information contained in the visible part of the frame, thus making the

recognition task easier. In the end, it is likely that the optimal solution will combine the

proposed techniques, each working in a different context. Therefore, extensive experimental tests must be

carried out to find the best trade-off between efficiency and effectiveness.
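
Once image features have been matched to the stored database, the angle about the line-of-sight can be obtained from a least-squares fit. The sketch below is a minimal illustration, not the project's algorithm: it assumes the matches are already established, that both point sets are expressed in a common planar frame at the same scale, and that projective distortion is negligible. The names `estimate_roll_deg` and `rotate` and the sample coordinates are hypothetical.

```python
import math

def estimate_roll_deg(image_pts, model_pts):
    """Least-squares 2D rotation (degrees) that maps model_pts onto image_pts.

    Both inputs are equal-length sequences of (x, y) pairs, matched by index.
    Translation is removed by centring each set on its centroid.
    """
    n = len(image_pts)
    if n != len(model_pts) or n < 2:
        raise ValueError("need at least two matched point pairs")
    cix = sum(p[0] for p in image_pts) / n
    ciy = sum(p[1] for p in image_pts) / n
    cmx = sum(p[0] for p in model_pts) / n
    cmy = sum(p[1] for p in model_pts) / n
    s_dot = s_cross = 0.0
    for (xi, yi), (xm, ym) in zip(image_pts, model_pts):
        ax, ay = xm - cmx, ym - cmy      # centred model point
        bx, by = xi - cix, yi - ciy      # centred image point
        s_dot += ax * bx + ay * by       # proportional to cos(angle)
        s_cross += ax * by - ay * bx     # proportional to sin(angle)
    if s_dot == 0.0 and s_cross == 0.0:
        raise ValueError("degenerate point sets: rotation is undefined")
    return math.degrees(math.atan2(s_cross, s_dot))

def rotate(points, angle_deg, tx=0.0, ty=0.0):
    """Rotate points counter-clockwise by angle_deg, then translate (test helper)."""
    c, s = math.cos(math.radians(angle_deg)), math.sin(math.radians(angle_deg))
    return [(c * x - s * y + tx, s * x + c * y + ty) for x, y in points]

# Hypothetical coastline landmarks from the database and their imaged positions.
model = [(0.0, 0.0), (4.0, 0.0), (4.0, 3.0), (1.0, 5.0)]
image = rotate(model, 25.0, tx=2.0, ty=-1.0)
print(f"estimated rotation about the line-of-sight: {estimate_roll_deg(image, model):.3f} deg")
```

Because the estimate sums over all matched pairs, it remains usable when clouds hide some features, provided at least two distinct matches survive. Outlier rejection for wrong matches is left out for brevity.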

Fig. 1: Summary of a Low Earth Orbit (LEO) system and controls.

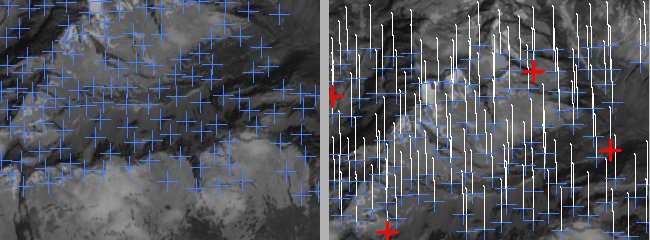

Fig. 2: Features identified and tracked between two acquired CCD test images along the perturbed LEO orbit.